As next-generation large-aperture telescopes demand ever higher-resolution wavefront sensing, the Shack-Hartmann wavefront sensor (SHWFS) faces a long-standing trade-off between spatial sampling rate and signal-to-noise ratio: using more, smaller subapertures resolves finer wavefront structure, but each subaperture then collects fewer photons.

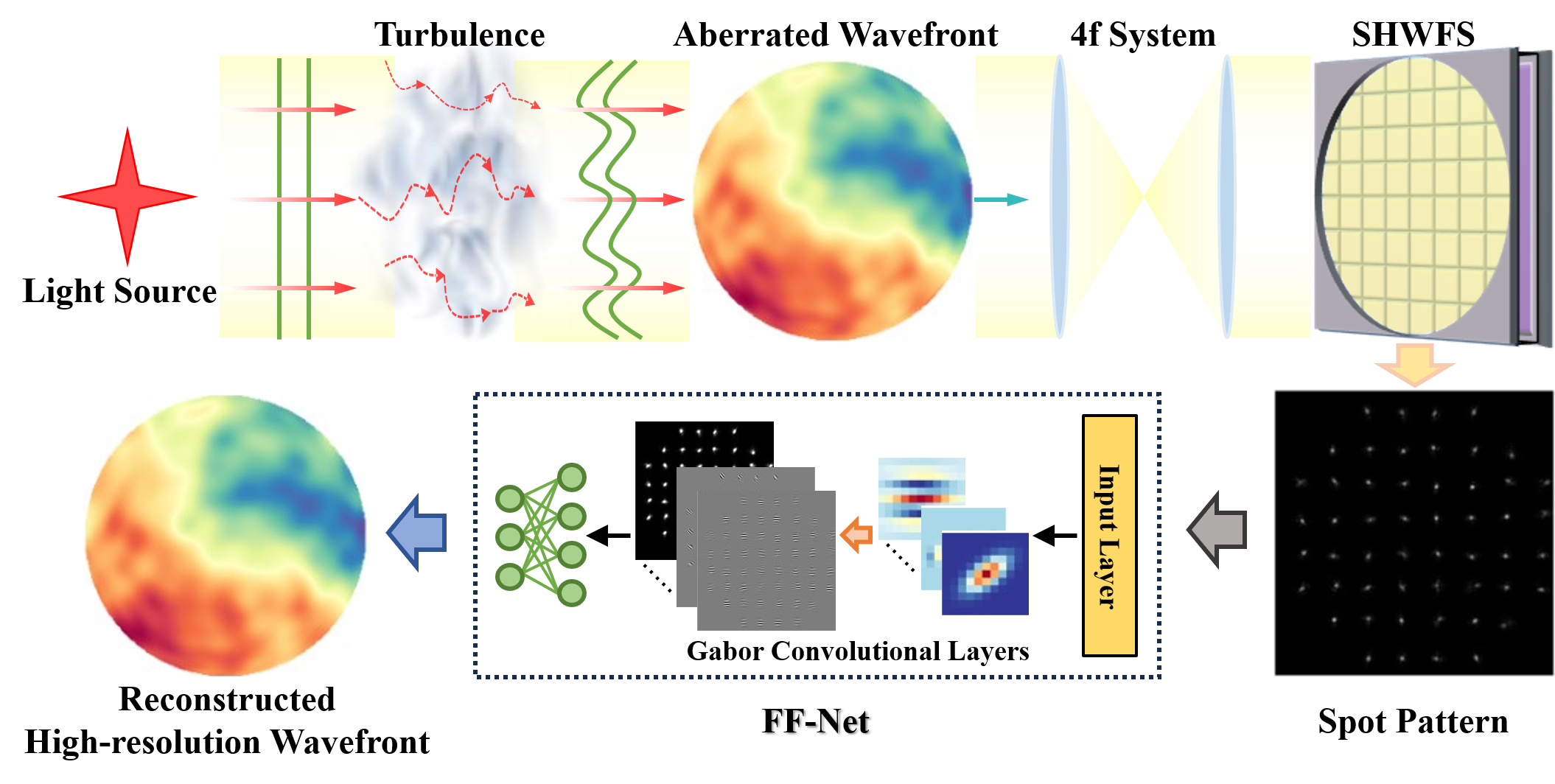

Recently, Prof. Heng Zuo's team at the Nanjing Institute of Astronomical Optics & Technology, Chinese Academy of Sciences, proposed a super-resolution wavefront reconstruction method based on a Frequency-domain Filter-based Neural Network (FF-Net, Fig. 1).

Without any change to the sensor hardware, the approach achieves high-precision, high-resolution wavefront reconstruction under spatially undersampled conditions.

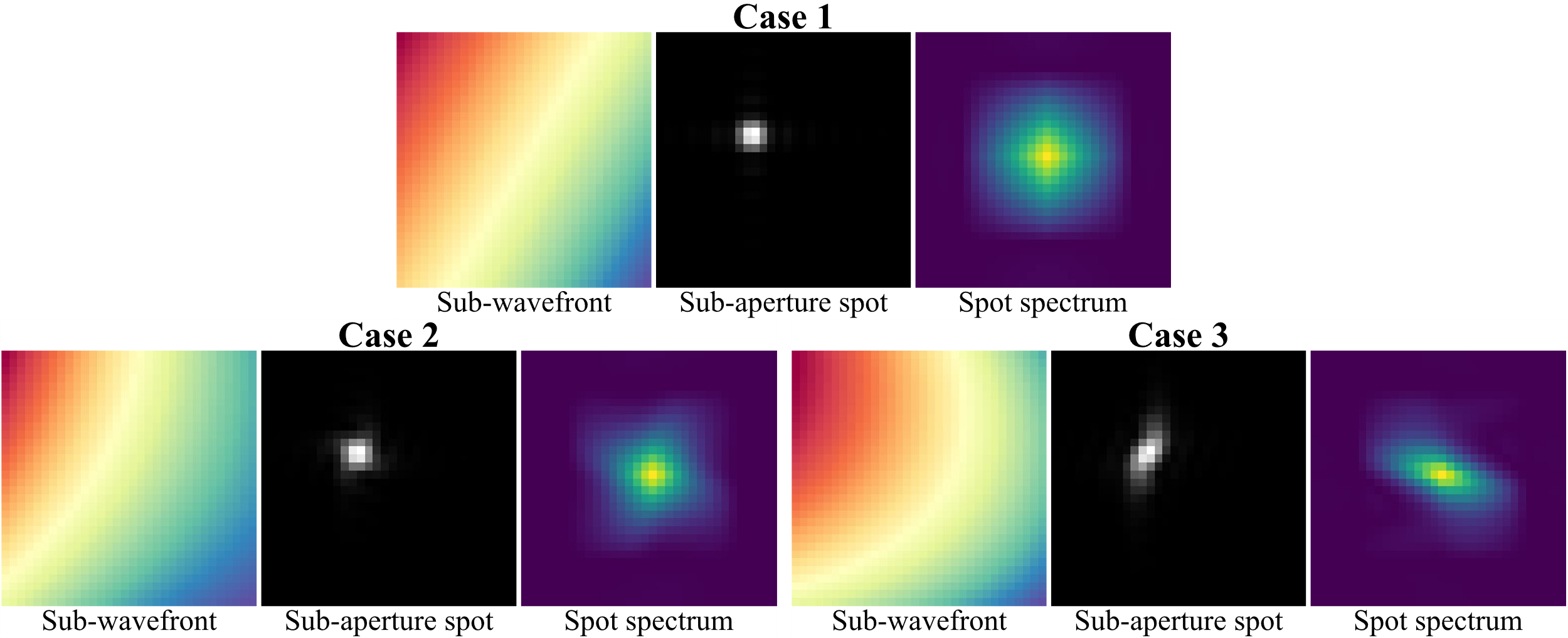

Existing deep learning models often overlook the imaging physics of the wavefront sensor. To address this, the team used Fourier optics to derive a physical prior: under spatial undersampling, the spectra of the subaperture spots are anisotropic (Fig. 2). Building on this prior, they replaced conventional convolutional kernels with learnable Gabor filters.
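To make the physical prior concrete, the sketch below simulates a single square subaperture whose sub-wavefront carries a phase `phase_coeff * u**2` that varies only along x. This is a hypothetical stand-in for the higher-order aberration left inside a subaperture when the wavefront is undersampled; it is not the aberration model used in the paper. The spot is the squared modulus of the Fourier transform of the pupil field, and the spot spectrum is the modulus of the spot's Fourier transform, which equals the magnitude of the pupil-field autocorrelation. The function names, the 16-pixel pupil, and the 64-pixel grid are illustrative assumptions.

```python
import numpy as np

def spot_spectrum(phase_coeff, pupil_px=16, grid_px=64):
    """|FFT| of the spot from one square subaperture with phase phase_coeff * u**2.

    Hypothetical aberration: u runs from -1 to 1 across the subaperture along x
    only, so the phase varies along x and is constant along y.
    grid_px >= 2 * pupil_px keeps the circular autocorrelation free of wrap-around.
    """
    u = np.linspace(-1.0, 1.0, pupil_px)
    phase = np.tile(phase_coeff * u ** 2, (pupil_px, 1))  # rows = y, columns = x
    field = np.zeros((grid_px, grid_px), dtype=complex)   # zero padding oversamples the spot
    field[:pupil_px, :pupil_px] = np.exp(1j * phase)
    spot = np.abs(np.fft.fft2(field)) ** 2  # far-field intensity: the spot image
    return np.abs(np.fft.fft2(spot))        # |spot spectrum| = |pupil-field autocorrelation|

def spectral_moments(spectrum):
    """Normalized second moments of the spectrum along x and y (pupil-shift pixels squared)."""
    n = spectrum.shape[0]
    shift = np.fft.fftfreq(n) * n  # signed pupil shift for each FFT index
    dy, dx = np.meshgrid(shift, shift, indexing="ij")  # axis 0 = y, axis 1 = x
    w = spectrum / spectrum.sum()
    return (w * dx ** 2).sum(), (w * dy ** 2).sum()

print(spectral_moments(spot_spectrum(0.0)))  # flat sub-wavefront: equal x and y moments
print(spectral_moments(spot_spectrum(6.0)))  # x-only phase: the x moment drops below the y moment
```

With a flat sub-wavefront the spectrum is isotropic. With the x-only phase, the field decorrelates only for pupil shifts along x, so the spectrum narrows along x while its y profile is unchanged; the direction of the anisotropy follows the orientation of the aberration.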

Gabor filters are anisotropic, offering strong directional selectivity and frequency localization, which allows FF-Net to separate high-order aberration features from blurred spot images.
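As a rough illustration of directional selectivity, the sketch below builds real 2D Gabor kernels in the standard textbook parameterization (orientation `theta`, carrier wavelength `lambd`, envelope width `sigma`, aspect ratio `gamma`, phase `psi`) and reports which orientation responds most strongly to an oriented stripe pattern. The article does not give FF-Net's filter parameterization; the function names and parameter values here are hypothetical, and in a learnable layer these parameters would be trained rather than fixed.

```python
import numpy as np

def gabor_kernel(size, theta, lambd, sigma, gamma=0.5, psi=0.0):
    """Real 2D Gabor kernel (standard parameterization; size should be odd).

    theta: carrier orientation (radians); lambd: carrier wavelength (pixels);
    sigma: Gaussian envelope width (pixels); gamma: envelope aspect ratio
    (gamma < 1 stretches the envelope perpendicular to the carrier); psi: carrier phase.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate coordinates so that x_r runs along the carrier direction.
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_r / lambd + psi)
    return envelope * carrier

def orientation_responses(image, thetas, lambd=6.0, sigma=3.0, size=15):
    """Peak |response| of one Gabor kernel per orientation (circular FFT convolution)."""
    spectrum = np.fft.fft2(image)
    responses = []
    for theta in thetas:
        k = gabor_kernel(size, theta, lambd, sigma)
        k -= k.mean()  # zero-mean kernel: no response to a uniform background
        padded = np.zeros(image.shape)
        padded[:size, :size] = k
        conv = np.fft.ifft2(spectrum * np.fft.fft2(padded)).real
        responses.append(np.abs(conv).max())
    return np.array(responses)

yy, xx = np.mgrid[0:60, 0:60]
thetas = [0.0, np.pi / 4, np.pi / 2, 3 * np.pi / 4]
print(orientation_responses(np.cos(2 * np.pi * xx / 6.0), thetas))  # largest at theta = 0
```

Stripes that vary along x excite the `theta = 0` kernel most strongly, and stripes that vary along y excite `theta = pi/2`. An ordinary convolution kernel can learn similar behavior, but the Gabor form builds orientation and frequency tuning directly into a handful of parameters.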

Figure 2 Sub-wavefronts, subaperture spot images, and corresponding spot spectra under three conditions

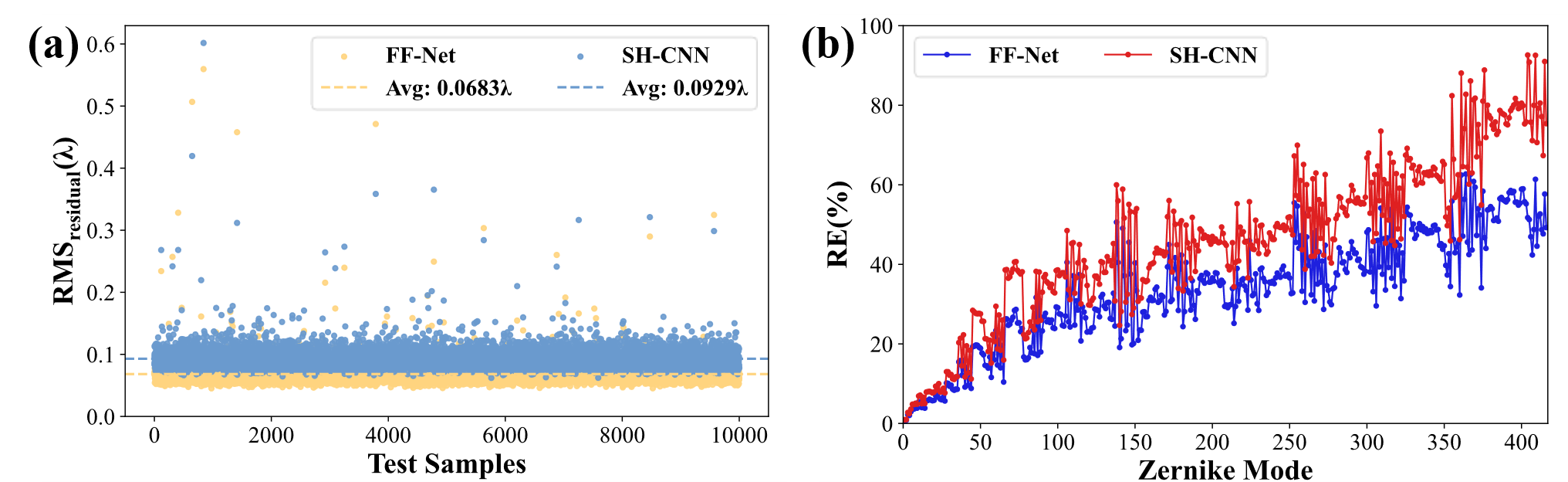

Numerical simulations show that FF-Net performs strongly on super-resolution reconstruction tasks; Figure 3 compares it with SH-CNN in terms of residual wavefront RMS and per-mode relative error.

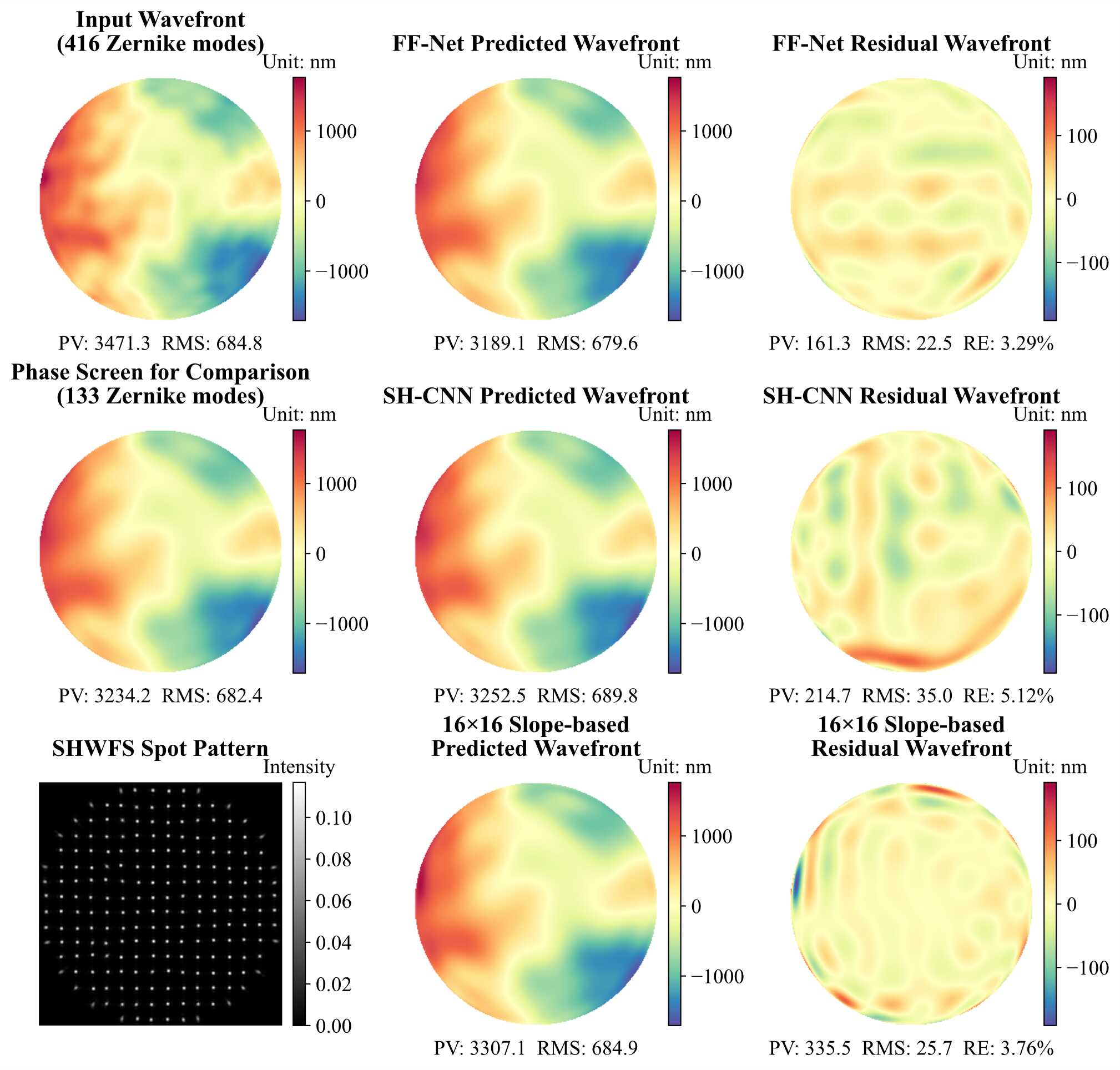

Under spatially undersampled conditions, the method accurately reconstructs a number of Zernike modes up to 8 times the number of subapertures. For low-order modes, it even outperforms a traditional slope-based method that uses four times the subaperture density (Figure 4).
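For context on the slope-based baseline: a conventional Shack-Hartmann pipeline estimates each subaperture's local wavefront slope from the displacement of its spot centroid, then fits modal coefficients to the stacked slopes by least squares. The sketch below shows centre-of-gravity centroiding and a least-squares modal fit under simple assumptions. The Gaussian test spot, the pixel pitch and focal length, and the toy interaction matrix (gradients of tip, tilt, and defocus at four hypothetical subaperture centres, in normalized pupil units) are all illustrative, not the specific slope-based reconstructor used in the paper.

```python
import numpy as np

def cog_centroid(spot):
    """Centre-of-gravity centroid (x, y) of one subaperture spot image, in pixels."""
    spot = np.clip(spot, 0.0, None)  # negative pixels (e.g. after background subtraction) are ignored
    ys, xs = np.indices(spot.shape)
    total = spot.sum()
    return (xs * spot).sum() / total, (ys * spot).sum() / total

def local_slopes(spot, pixel_pitch, focal_length):
    """Local wavefront slopes (rad) from the spot shift relative to the subaperture centre."""
    cx, cy = cog_centroid(spot)
    ref_x = (spot.shape[1] - 1) / 2.0
    ref_y = (spot.shape[0] - 1) / 2.0
    return (cx - ref_x) * pixel_pitch / focal_length, (cy - ref_y) * pixel_pitch / focal_length

def fit_modes(slopes, interaction_matrix):
    """Least-squares modal coefficients a minimizing ||D a - s||; D has shape (2N, n_modes)."""
    coeffs, *_ = np.linalg.lstsq(interaction_matrix, slopes, rcond=None)
    return coeffs

def gaussian_spot(size, x0, y0, sigma):
    """Hypothetical noise-free spot used in place of a real lenslet image."""
    ys, xs = np.indices((size, size))
    return np.exp(-((xs - x0) ** 2 + (ys - y0) ** 2) / (2.0 * sigma ** 2))

# Toy interaction matrix: x and y gradients of tip (x), tilt (y) and defocus (x^2 + y^2)
# at four hypothetical subaperture centres (N = 4, so D has 2N = 8 rows).
centres = np.array([[-0.5, -0.5], [0.5, -0.5], [-0.5, 0.5], [0.5, 0.5]])
dWdx = np.column_stack([np.ones(4), np.zeros(4), 2 * centres[:, 0]])
dWdy = np.column_stack([np.zeros(4), np.ones(4), 2 * centres[:, 1]])
D = np.vstack([dWdx, dWdy])

spot = gaussian_spot(16, 9.5, 7.5, 1.2)  # spot shifted 2 px in x from the centre (7.5, 7.5)
print(local_slopes(spot, pixel_pitch=5e-6, focal_length=5e-3))
```

Because the interaction matrix has 2N rows for N subapertures, an ordinary least-squares fit of this kind can determine at most 2N independent modal coefficients. This is a general property of linear slope-based reconstruction, not a claim about the paper's baseline.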

Through GPU acceleration, FF-Net achieves single-frame inference times below 1 millisecond, meeting the real-time requirements of most astronomical adaptive optics systems.

Figure 3 Comparison of super-resolution wavefront reconstruction performance between FF-Net and SH-CNN on the test dataset. (a) Scatter plot of root-mean-square (RMS) values for residual wavefronts; (b) Line chart of relative errors in single-mode reconstruction.

Figure 4 Comparison of wavefront reconstruction results among FF-Net, SH-CNN, and the slope-based method for 133 Zernike modes (the deep learning methods used 8×8 microlens arrays, while the slope-based method used a 16×16 microlens array)

This work shows that building physical principles into neural network design is an effective approach to complex optical inverse problems.

The research findings were published in the international optics journal Optics Express under the title "Frequency-domain filtering-based neural network for Shack-Hartmann super-resolution wavefront reconstruction".

Ph.D. candidate Xu Hou from the Nanjing Institute of Astronomical Optics & Technology is the first author, and researcher Heng Zuo is the corresponding author.

This research was supported by the National Key R&D Program of China (2022YFA1603001, 2022YFA1603000) and the National Natural Science Foundation of China (12073053).

Article link: https://doi.org/10.1364/OE.578992